
Softmax Classification

모플로

2021. 8. 1. 17:20



- Discrete Probability Distribution: a probability distribution over a discrete (countable) set of outcomes

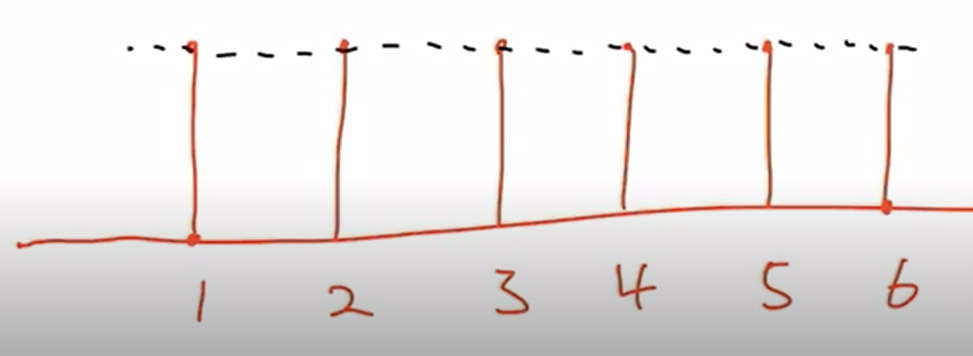

- The PMF (probability mass function) of a die's discrete probability distribution is shown in the figure.

(Image source: Boostcourse ai tech pre course)

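As a minimal sketch, assuming a fair six-sided die (every face has probability 1/6), the PMF can be held in a tensor whose entries are non-negative and sum to 1, and `torch.multinomial` can draw rolls from it. The names `faces`, `pmf`, and `rolls` are illustrative only.

```python
import torch

# Hypothetical example: PMF of a fair six-sided die, P(X = k) = 1/6 for k = 1..6
faces = torch.arange(1, 7)
pmf = torch.full((6,), 1 / 6)

print(pmf.sum())  # total probability of a PMF is 1

# Draw 10 rolls: multinomial returns indices 0..5, which are mapped to faces 1..6
torch.manual_seed(0)
rolls = faces[torch.multinomial(pmf, num_samples=10, replacement=True)]
print(rolls)
```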

Softmax

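- In rock-paper-scissors: given that I throw scissors, what is the probability of each move by the opponent?

- P(rock | scissors) = ?

- P(scissors | scissors) = ?

- P(paper | scissors) = ?

- Softmax turns raw scores into positive values that sum to 1, so they can be read as such probabilities.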
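The conversion is softmax(z)_i = exp(z_i) / sum_j exp(z_j). A minimal hand-written sketch (the function name `softmax_manual` is hypothetical, not a PyTorch API) that can be compared with `F.softmax` on the scores `[1, 2, 3]`:

```python
import torch
import torch.nn.functional as F

def softmax_manual(z: torch.Tensor, dim: int = 0) -> torch.Tensor:
    # Subtracting the max first avoids overflow in exp; the result is unchanged
    z = z - z.max(dim=dim, keepdim=True).values
    exp_z = torch.exp(z)
    return exp_z / exp_z.sum(dim=dim, keepdim=True)

z = torch.FloatTensor([1, 2, 3])
manual = softmax_manual(z, dim=0)
builtin = F.softmax(z, dim=0)
print(manual)                           # same values as F.softmax
print(torch.allclose(manual, builtin))  # True
```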

```python
import torch
import torch.nn.functional as F

z = torch.FloatTensor([1, 2, 3])
hypothesis = F.softmax(z, dim=0)
print(hypothesis)
# -> tensor([0.0900, 0.2447, 0.6652])

print(hypothesis.sum())
# -> tensor(1.)
```

Cross Entropy Loss
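For N samples with one-hot targets y and softmax outputs ŷ, the cost is `L = -(1/N) * sum_i sum_c y[i, c] * log(ŷ[i, c])`. Because y is one-hot, only the true-class term survives, so each sample contributes -log of the probability assigned to its correct class. A small sketch with made-up probabilities (hypothetical numbers) checks that both readings agree:

```python
import torch

# Hypothetical softmax outputs for 2 samples and 3 classes (each row sums to 1)
probs = torch.tensor([[0.7, 0.2, 0.1],
                      [0.1, 0.3, 0.6]])
labels = torch.tensor([0, 2])  # true class index of each sample

# Full formula: one-hot targets times -log(probs), summed over classes, averaged over samples
one_hot = torch.zeros_like(probs)
one_hot[torch.arange(2), labels] = 1.0
cost_full = (one_hot * -torch.log(probs)).sum(dim=1).mean()

# Shortcut: take -log(prob) of the true class directly
cost_pick = -torch.log(probs[torch.arange(2), labels]).mean()

print(cost_full, cost_pick)  # identical values
```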

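```python
# Continues the session above (torch and F already imported).
# Values are random, so a rerun prints different numbers.

# z: random scores for 3 samples and 5 classes
z = torch.rand(3, 5, requires_grad=True)
hypothesis = F.softmax(z, dim=1)
print(hypothesis)
# -> tensor([[0.2128, 0.2974, 0.2574, 0.1173, 0.1150],
#            [0.1486, 0.2933, 0.1740, 0.2342, 0.1499],
#            [0.2880, 0.1427, 0.2565, 0.1774, 0.1355]], grad_fn=<SoftmaxBackward>)

# y holds the class index of each sample
y = torch.randint(5, (3,)).long()
print(y)
# -> tensor([4, 2, 3])

y_one_hot = torch.zeros_like(hypothesis)
# y.shape == (3,)                e.g. [4, 2, 3]
# y.unsqueeze(1).shape == (3, 1) e.g. [[4], [2], [3]]
# scatter_ writes 1 at column y[i] of row i, producing a one-hot matrix
y_one_hot.scatter_(1, y.unsqueeze(1), 1)

cost = (y_one_hot * -torch.log(hypothesis)).sum(dim=1).mean()
print(cost)
# -> tensor(1.8803, grad_fn=<MeanBackward0>)
```

PyTorch provides functions that express the computations above more concisely.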

- Softmax

```python
# Both expressions give the same result (z from the snippet above)

# 1. Low level
torch.log(F.softmax(z, dim=1))

# 2. High level
F.log_softmax(z, dim=1)

# -> tensor([[-1.5474, -1.2128, -1.3570, -2.1426, -2.1624],
#            [-1.9067, -1.2264, -1.7488, -1.4515, -1.8979],
#            [-1.2449, -1.9471, -1.3606, -1.7296, -1.9989]],
#           grad_fn=<LogSoftmaxBackward>)
```
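The two forms agree for ordinary scores, but one practical reason to prefer `F.log_softmax` is numerical: with a large score gap, `F.softmax` underflows to exactly 0 and `torch.log` then returns `-inf`, while `F.log_softmax` computes log-probabilities directly and stays finite. A small sketch with deliberately extreme, made-up scores:

```python
import torch
import torch.nn.functional as F

# Hypothetical extreme scores: a gap of 1000 makes exp(-1000) underflow to 0 in float32
z = torch.tensor([[0.0, 1000.0]])

low = torch.log(F.softmax(z, dim=1))  # softmax gives [0., 1.], so log gives [-inf, 0.]
high = F.log_softmax(z, dim=1)        # stays finite: [-1000., 0.]

print(low)
print(high)
```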

- Cross-entropy Loss

```python
# 1. Low level
(y_one_hot * -torch.log(F.softmax(z, dim=1))).sum(dim=1).mean()

# 2. High level
F.nll_loss(F.log_softmax(z, dim=1), y)

# 3. cross_entropy function (takes raw scores z and class indices y)
F.cross_entropy(z, y)

# -> tensor(1.8803, grad_fn=<NllLossBackward>)
```
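Since the snippets above depend on unseeded random values, here is a self-contained sketch (with an arbitrary seed, so its numbers differ from those shown) that checks the three forms agree. It builds the one-hot matrix with `F.one_hot` instead of `scatter_`; both yield the same matrix. Note that `F.cross_entropy` expects raw scores, not softmax outputs.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)                       # arbitrary seed for reproducibility
z = torch.rand(3, 5, requires_grad=True)   # raw scores: 3 samples, 5 classes
y = torch.randint(5, (3,))                 # class indices (int64)

# F.one_hot returns an int64 tensor, so cast it to float before multiplying
y_one_hot = F.one_hot(y, num_classes=5).float()

loss_low = (y_one_hot * -torch.log(F.softmax(z, dim=1))).sum(dim=1).mean()
loss_nll = F.nll_loss(F.log_softmax(z, dim=1), y)
loss_ce = F.cross_entropy(z, y)

print(torch.allclose(loss_low, loss_nll), torch.allclose(loss_nll, loss_ce))  # True True
```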

Example) Training a softmax classifier with F.cross_entropy

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim

# Fix the seed so every run gives the same result
torch.manual_seed(1)

# 8 samples with 4 features each; labels are class indices 0, 1, 2
x_train = [[1, 2, 1, 1], [2, 1, 3, 2], [3, 1, 3, 4], [4, 1, 5, 5],
           [1, 7, 5, 5], [1, 2, 5, 6], [1, 6, 6, 6], [1, 7, 7, 7]]
y_train = [2, 2, 2, 1, 1, 1, 0, 0]
x_train = torch.FloatTensor(x_train)
y_train = torch.LongTensor(y_train)

class SoftmaxClassifierModel(nn.Module):
    def __init__(self):
        super().__init__()
        # 4 input features -> 3 class scores
        self.linear = nn.Linear(4, 3)

    def forward(self, x):
        # Return raw scores; F.cross_entropy applies log_softmax internally
        return self.linear(x)

model = SoftmaxClassifierModel()
optimizer = optim.SGD(model.parameters(), lr=0.1)

nb_epochs = 1000
for epoch in range(nb_epochs + 1):
    prediction = model(x_train)
    cost = F.cross_entropy(prediction, y_train)

    optimizer.zero_grad()
    cost.backward()
    optimizer.step()

    if epoch % 100 == 0:
        print(f'epoch: {epoch} cost: {cost.item()}')

# ->
# epoch: 0 cost: 1.6167852878570557
# epoch: 100 cost: 0.6588907837867737
# epoch: 200 cost: 0.5734434723854065
# epoch: 300 cost: 0.5181514024734497
# epoch: 400 cost: 0.47326546907424927
# epoch: 500 cost: 0.4335159659385681
# epoch: 600 cost: 0.3965628743171692
# epoch: 700 cost: 0.36091411113739014
# epoch: 800 cost: 0.3253922164440155
# epoch: 900 cost: 0.28917840123176575
# epoch: 1000 cost: 0.25414788722991943
```
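For comparison, a low-level variant of the same training computes the cost by hand from one-hot targets instead of calling `F.cross_entropy`. This is a sketch that assumes explicit weight `W` and per-class bias `b` tensors initialized to zero in place of `nn.Linear`, so its printed costs will not match the log above (with zero parameters every class starts at probability 1/3, so the first cost is log 3).

```python
import torch
import torch.nn.functional as F
import torch.optim as optim

x_train = torch.FloatTensor([[1, 2, 1, 1], [2, 1, 3, 2], [3, 1, 3, 4], [4, 1, 5, 5],
                             [1, 7, 5, 5], [1, 2, 5, 6], [1, 6, 6, 6], [1, 7, 7, 7]])
y_train = torch.LongTensor([2, 2, 2, 1, 1, 1, 0, 0])
y_one_hot = F.one_hot(y_train, num_classes=3).float()

# Parameters created by hand (zero initialization is an assumption of this sketch)
W = torch.zeros((4, 3), requires_grad=True)
b = torch.zeros(3, requires_grad=True)
optimizer = optim.SGD([W, b], lr=0.1)

costs = []
for epoch in range(1001):
    hypothesis = F.softmax(x_train.matmul(W) + b, dim=1)
    # Low-level cross entropy: one-hot targets times -log(probabilities)
    cost = (y_one_hot * -torch.log(hypothesis)).sum(dim=1).mean()

    optimizer.zero_grad()
    cost.backward()
    optimizer.step()

    costs.append(cost.item())
    if epoch % 100 == 0:
        print(f'epoch: {epoch} cost: {cost.item():.6f}')
```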